Neural networks: The challenges of object recognition

Recognizing different vehicles digitally is a challenge in itself. Doing this in real time: even more so. Dr. Claus Lenz from Providentia++ partner Cognition Factory on the pitfalls of object recognition on the A9 highway.

Mr. Lenz, in your bachelor’s thesis you dealt with speech recognition, and for your master’s thesis you developed an automated method that condenses videos into a recording containing only the essentials. Now, for Providentia++, the focus is on identifying vehicles. How are all of these things related to each other?

I focused on human-machine communication in my studies. For my diploma thesis I worked with an “Asynchronous Hidden Markov Model.” Today you would probably call it artificial intelligence. For my doctorate I concentrated on human-robot collaboration in the research project CoTeSys, where the focus was on how humans and robots solve production tasks together. What all of these tasks have in common is that a machine is taught step by step to learn and to solve a task independently. First it was about recognizing speech, then about identifying important passages in videos. Now, in Providentia++, it is about distinguishing different classes of vehicles from each other as reliably as possible. The acquired data is analyzed and, ideally, recommendations are derived from it.

How are you going about this in Providentia++?

Unlike in basic research, we are using solutions that are already established – in other words, architectures that have already been developed and pretrained. We first work with standard data sets and then continuously improve the system with our own data. One of the main tasks in this area is always to find both suitable approaches and suitable data sets and to prepare them for the algorithms. At the beginning of the project, for example, there was a great deal of data recorded from the perspective of a vehicle, such as data for training assistance systems. However, there was very little monitoring data, that is, data collected from outside and above the vehicle.

How did you teach the system to distinguish between cars, trucks, and delivery vehicles?

As I said before, the goal is to combine the right approaches with suitable data sets. If there is not enough data available from the application, new data sets have to be created. This often involves a lot of manual work, because the images have to be annotated, that is, information has to be added to each image. We speak of “labeling” when a rectangle is drawn around a vehicle, for example. Each individual annotation helps the system to learn, and over time it will be able to distinguish better and better between vehicle classes. After a certain time, this can be done very precisely. However, if the system has to decide in fractions of a second, that is, make the right decision in real time, errors can never be completely avoided. So it can happen that a vehicle is not immediately assigned to a class unambiguously. This is where additional sources of information such as radar sensors or the time sequence – the tracking of the vehicles over time – help us. Generally speaking, systems are either fast and rather inaccurate or slow and precise. So the challenge in Providentia++ is to find a balance.
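Note: One simple way to picture how tracking over time can stabilize a classification is a majority vote over the most recent frames of a single tracked vehicle. The following is a minimal sketch under stated assumptions, not the method used in Providentia++: the function name `smooth_track_labels`, the window size, and the example labels are all hypothetical.

```python
from collections import Counter


def smooth_track_labels(frame_labels, window=5):
    """Smooth the per-frame class labels of ONE tracked vehicle.

    Each output entry is the majority vote over the last `window`
    per-frame predictions (hypothetical helper, for illustration only).
    """
    smoothed = []
    for i in range(len(frame_labels)):
        # Labels predicted in the current frame and the frames before it.
        recent = frame_labels[max(0, i - window + 1): i + 1]
        counts = Counter(recent)
        best = max(counts.values())
        # On a tie, prefer the most recent label among the tied candidates.
        for label in reversed(recent):
            if counts[label] == best:
                smoothed.append(label)
                break
    return smoothed


# Made-up per-frame predictions for one vehicle: a single "truck" outlier
# early on, then a genuine switch to "truck" at the end.
labels = ["car", "car", "truck", "car", "car", "truck", "truck", "truck"]
print(smooth_track_labels(labels, window=5))
```

In this sketch the isolated "truck" in the third frame is outvoted by its neighbors, while the sustained run of "truck" predictions at the end eventually changes the smoothed label. A larger window suppresses more outliers but reacts more slowly, which mirrors the balance between speed and accuracy described above.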

The weather and lighting conditions also play a role in the quality of object recognition. How can these factors be dealt with?

The quality of object recognition largely depends on three factors: the hardware (the sensors and accompanying data quality); the software (the robustness of the available algorithms); and the environment (weather conditions, for example). Reflections, scattered light, or haloes around light sources can hardly be avoided during snow or heavy rain. Even a single passing cloud can introduce noise that impairs the image quality and thus affects recognition. At present, our algorithms generally detect cars better than trucks or buses – simply because more and better data is available for cars. Standard data for trucks and buses, for example, often comes from the USA or Asia, where the vehicles look slightly different from those in Europe. So there is still a lack of “teaching material” here. We can collect the information we need from the Providentia++ cameras.

Object recognition can be used to classify vehicles and track their routes. What analyses are feasible on the basis of this data?

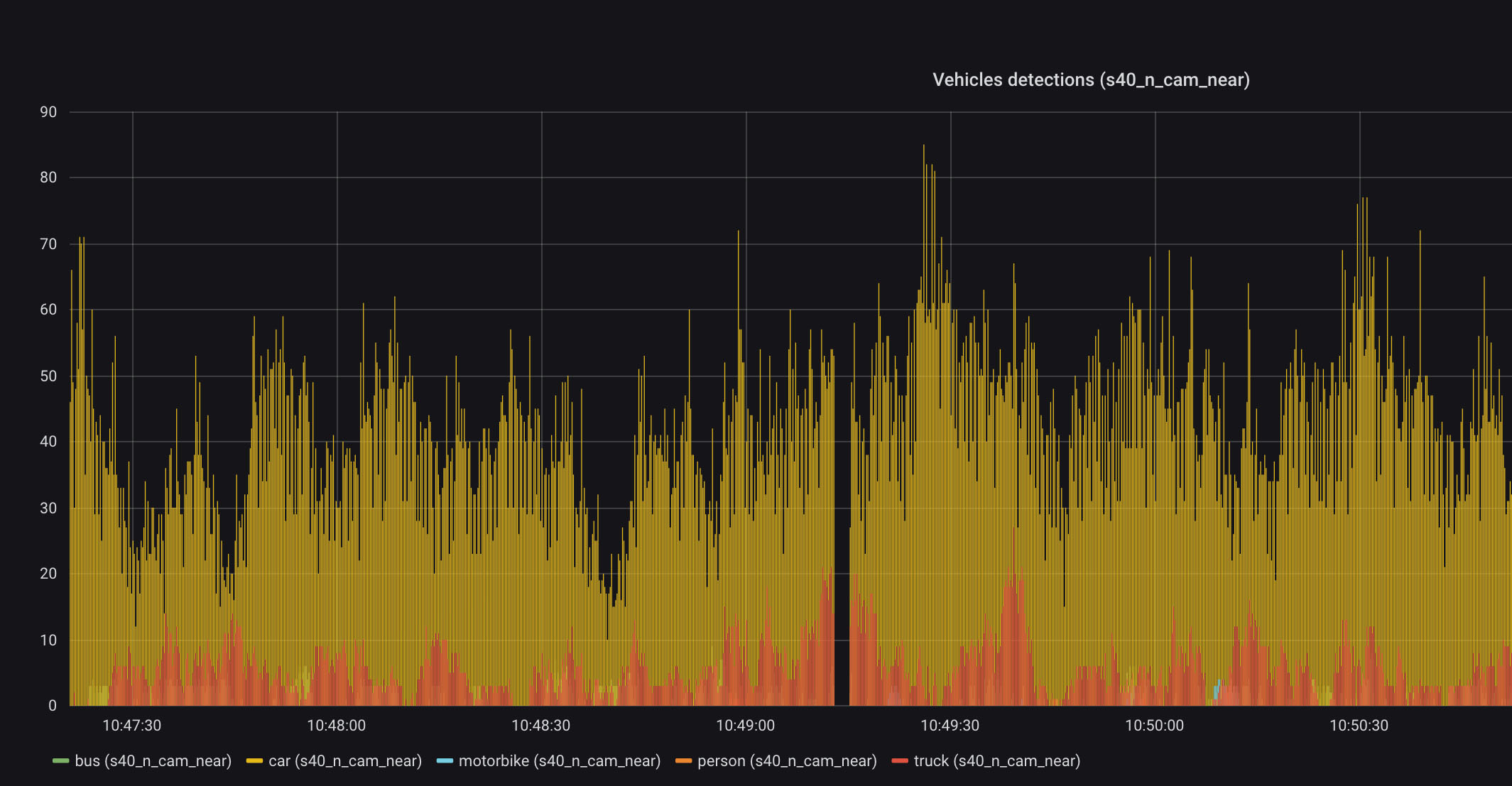

With our algorithms, we are able to take a snapshot of traffic about 20 times per second and store this data for one hundred days. It is therefore possible to find out how many cars, trucks, and buses drove past Garching on October 22, 2020, at 12:21 on their way from Munich to Nuremberg. However, it is much more exciting to analyze which lanes are particularly busy, for example, and to derive maneuver recommendations from this. One question we can pursue in this way: At what traffic density are lanes changed frequently, and how can this be prevented?
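Note: The figures above can be turned into a short back-of-the-envelope calculation and a toy query. This is a minimal sketch, not the project's actual storage or query code: the record layout `(timestamp, track_id, vehicle_class)`, the function names, and the sample detections are assumptions made up for illustration. Because a vehicle appears in many consecutive snapshots, the sketch counts each hypothetical track ID only once.

```python
from collections import Counter
from datetime import datetime, timedelta

SNAPSHOTS_PER_SECOND = 20   # "about 20 times per second" (from the interview)
RETENTION_DAYS = 100        # "one hundred days" (from the interview)


def snapshots_retained(rate=SNAPSHOTS_PER_SECOND, days=RETENTION_DAYS):
    """Approximate number of snapshots held at any one time."""
    return rate * 60 * 60 * 24 * days


def count_by_class(detections, start, end):
    """Count distinct tracked vehicles per class seen in [start, end).

    `detections` is an iterable of (timestamp, track_id, vehicle_class)
    tuples; this layout is a hypothetical one chosen for the example.
    """
    seen = {}
    for timestamp, track_id, vehicle_class in detections:
        if start <= timestamp < end:
            # Keep the first class observed for each track in the window.
            seen.setdefault(track_id, vehicle_class)
    return Counter(seen.values())


start = datetime(2020, 10, 22, 12, 21)
end = start + timedelta(minutes=1)

# Made-up sample data: track 1 shows up in two consecutive snapshots.
detections = [
    (start, 1, "car"),
    (start + timedelta(milliseconds=50), 1, "car"),
    (start + timedelta(seconds=10), 2, "truck"),
    (start + timedelta(seconds=30), 3, "car"),
    (start + timedelta(seconds=65), 4, "bus"),  # outside the one-minute window
]

print(snapshots_retained())                  # 172800000
print(count_by_class(detections, start, end))
```

At 20 snapshots per second, one hundred days correspond to roughly 172.8 million snapshots, which gives a sense of the data volume behind such a query.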

Is it possible to recognize special makes of cars, or to distinguish between electric vehicles and those with internal combustion engines?

In principle, yes. However, our research in the field of car make and model recognition has shown that determining the exact vehicle model still takes too long for real-time processing: The cameras see between 50 and 100 vehicles in a single image, and each of these must be analyzed. For the applications planned in Providentia++, it is initially sufficient to classify the vehicles into primary categories such as car, truck, motorcycle, or bus. In this way we also guarantee continuous processing of the data in real time.

In the next phase of Providentia++, traffic in urban spaces will also be studied. This means that pedestrians, cyclists, and motorcycles, for example, will be included. How are you approaching this?

We designed the architecture of our system in such a way that we can always add new functionalities. The recognition of people, for instance, has already been integrated. For example, people in vehicles are recognized, and motorcycles and people are classified separately. Artificial neural networks develop their particular strength precisely when they are used in a limited, clearly defined area. It is therefore best to use separate algorithms for the recognition of bicycles, pedestrians, and vehicles, and to then combine them at the end.

Note: In the field of image recognition, so-called convolutional neural networks are often used, whereby filters are applied over the entire image and analyze it piece by piece. This allows the characteristics of the image to be extracted and then classified. Further information on the method of convolutional neural networks can be found in the white paper “A Non-Technical Survey on Deep Convolutional Neural Network Architectures,” by Felix Altenberger and Dr. Claus Lenz, 2018.
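The idea of a filter that is applied over the entire image piece by piece can be illustrated in a few lines of plain Python. This is a minimal sketch of a single filter pass (no padding, stride 1), not a neural network: in a convolutional neural network the filter values are learned during training, whereas the tiny image and the hand-picked edge filter below are assumptions chosen for illustration. As is common in deep learning, the filter is applied without flipping it.

```python
def apply_filter(image, kernel):
    """Slide `kernel` over `image` and return the resulting feature map.

    Both arguments are lists of rows of numbers. Each output value is the
    sum of element-wise products of the kernel and the image patch under it.
    """
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# A tiny "image": dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A hand-picked filter that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

print(apply_filter(image, kernel))  # [[0, 2, 0], [0, 2, 0]]
```

The feature map is nonzero only where the filter sits on the boundary between the dark and bright regions. A real network stacks many such filters and feeds the extracted characteristics into a classifier.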
